New NVIDIA graphics cards are expected soon (probably September 2022), with amazing Tensor Core capabilities but also, according to current rumors, unbelievable power consumption:

~900W TGP - NVIDIA GeForce RTX 4090 Ti

~600W TGP - NVIDIA GeForce RTX 4090

~350W TGP - NVIDIA GeForce RTX 4080

https://wccftech.com/nvidia-geforce-rtx … rds-rumor/

Without air conditioning, which I don't have, I can't imagine an extra 600 or 900W of heat in a small room in the summer. Of course there is a choice: we can always buy an NVIDIA GeForce RTX 4080 with its 350W draw. But I think a more economical solution in the long run is to buy an NVIDIA GeForce RTX 4090 and lower its power limit to, say, 300W or 350W. This way we can probably get a card that is efficient in terms of performance per watt.
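For anyone who hasn't capped a card before: on current NVIDIA cards the power limit can be set with `nvidia-smi -pl <watts>`, and I assume the new cards will work the same way. Below is a minimal Python sketch that only builds and optionally runs that command. The helper names `power_limit_command` and `apply_power_limit` are my own, the 300W value is just the example from above, and the range a real card accepts depends on the card and driver.

```python
import shutil
import subprocess


def power_limit_command(watts, gpu_index=0):
    """Build the nvidia-smi call that caps board power for one GPU."""
    if watts <= 0:
        raise ValueError("power limit must be a positive number of watts")
    # -i selects the GPU, -pl sets the power limit in watts
    return ["nvidia-smi", "-i", str(gpu_index), "-pl", str(watts)]


def apply_power_limit(watts, gpu_index=0):
    """Run the command. Needs admin/root rights and a limit the driver allows."""
    if shutil.which("nvidia-smi") is None:
        raise RuntimeError("nvidia-smi not found on PATH")
    subprocess.run(power_limit_command(watts, gpu_index), check=True)


if __name__ == "__main__":
    # Only print the command here; call apply_power_limit(300) to really set it.
    print(" ".join(power_limit_command(300)))
```

The limit set this way is normally not kept across a reboot, so it would have to be reapplied at startup.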

In addition, I wonder about passive cooling of such a card. Today it is possible to passively cool a graphics card drawing up to 250W, although I believe that with some modifications it will be possible to reach 300W, especially if the card itself is designed for 600W.

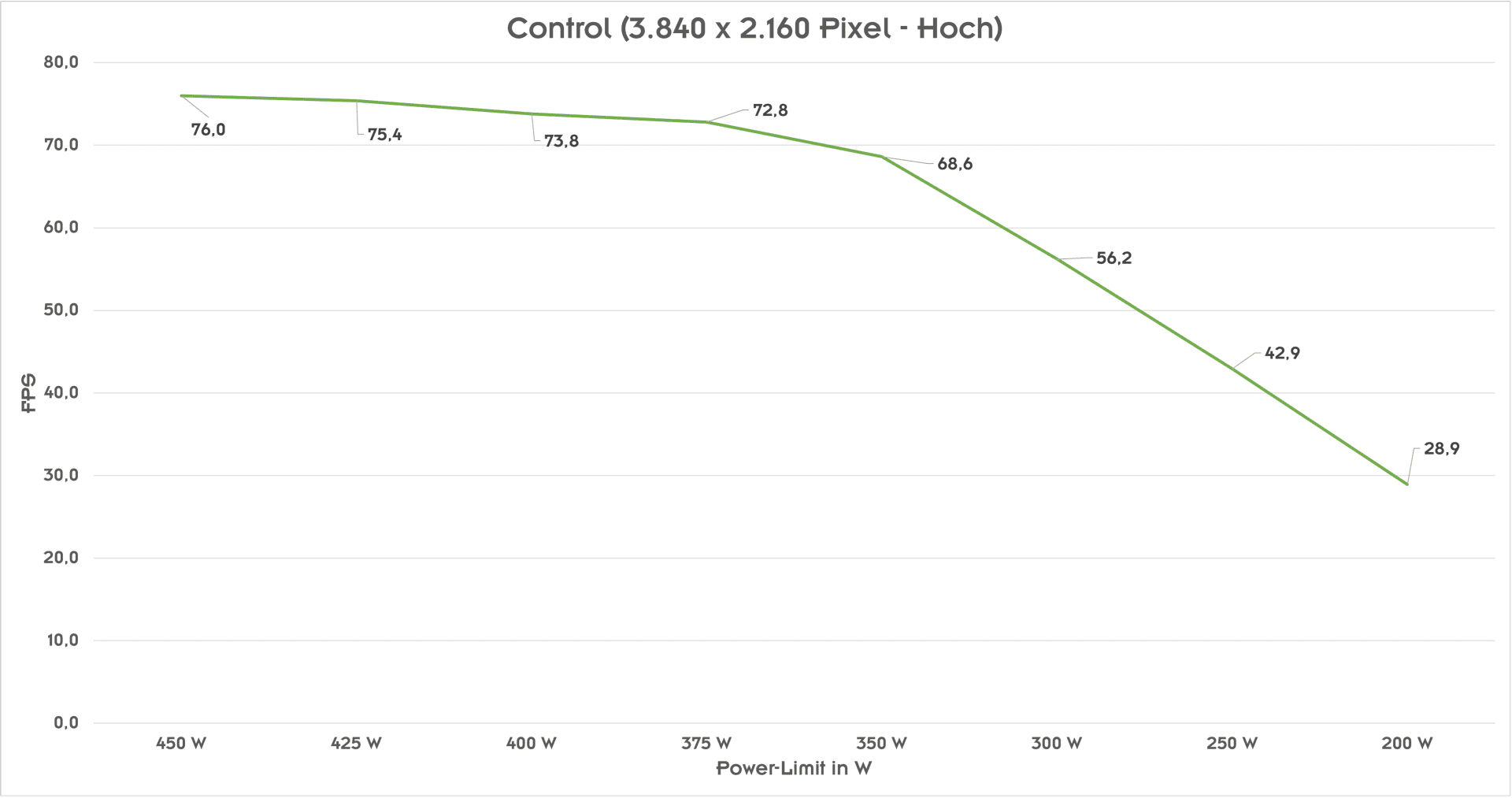

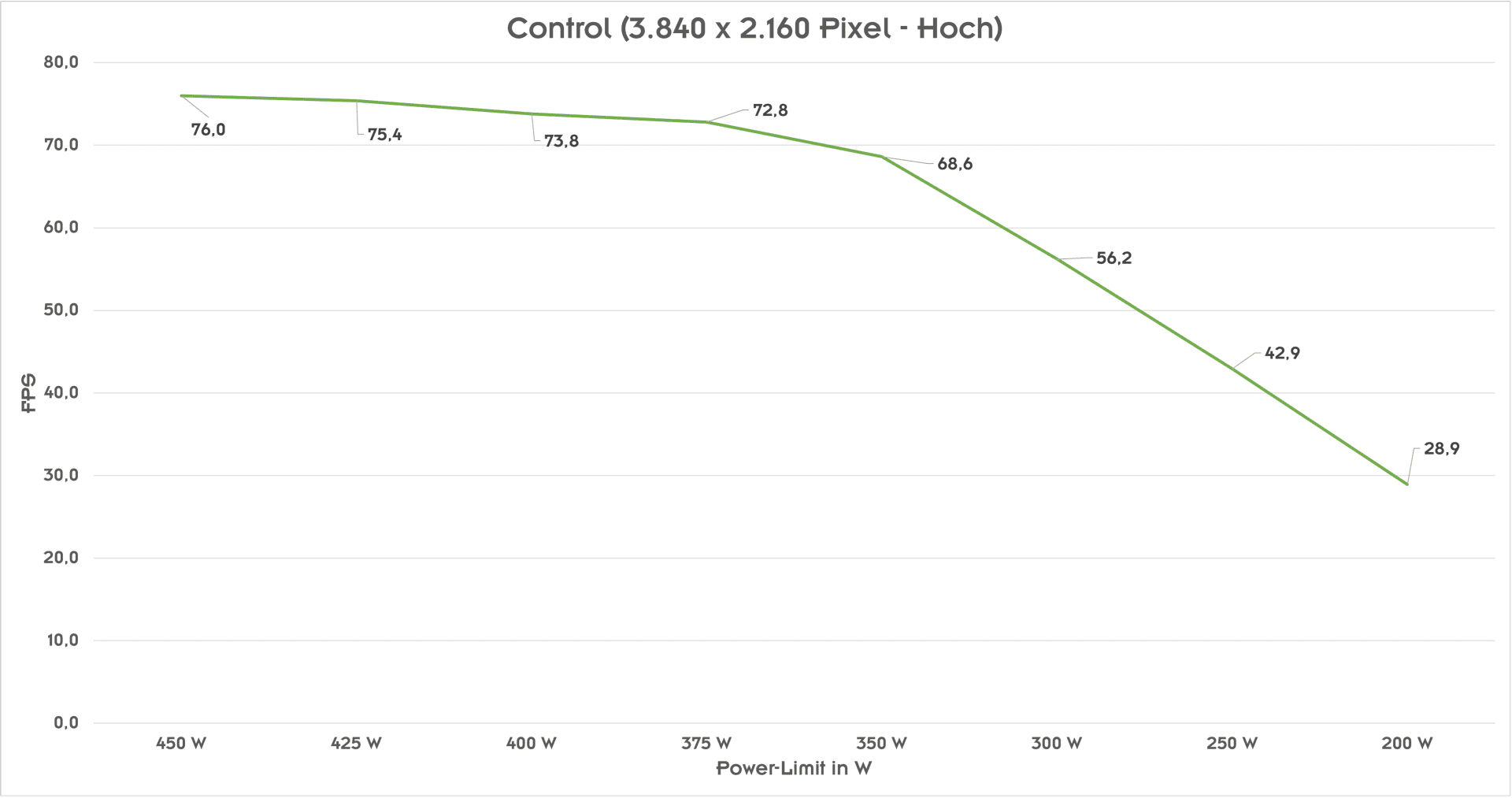

Until now, I believed that lowering the power consumption of a graphics card always leads to an increase in efficiency: relatively large at first, then less and less.

However, I have now found some tests that confirm this, but also show that once the power consumption is lowered below a certain level, the efficiency starts to drop dramatically. The tests indicate that this depends not so much on the architecture of the specific graphics card, but mainly on the software used for testing. This can be seen in the graphs below:

Hardware: Inno3D GeForce RTX 3090 Ti X3 OC

Software: Control

https://www.hardwareluxx.de/index.php/a … l?start=23

Hardware: MSI GeForce RTX 3090 Gaming X Trio

Software: Heaven Benchmark

https://www.forum-3dcenter.org/vbulleti … st12963231

I must admit that these results worry me a little, because they indicate that lowering the power consumption to 50% does not necessarily mean an increase in efficiency. The consolation is that, as we can see in the graphs, a lot depends on the software. So I am very interested to see how this looks with RIFE, which uses Tensor Cores.
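To make the comparison concrete once someone has numbers for RIFE, here is a small Python sketch of how I would calculate efficiency as frames per second per watt and find the most efficient power limit. The function names are my own, and the sample values are made-up placeholders, not measurements. They are only shaped like the curves described above: efficiency improves as the limit drops, then collapses below a certain point.

```python
def efficiency_curve(samples):
    """Return (watts, fps, fps_per_watt) rows, sorted by power limit."""
    return [(watts, fps, fps / watts) for watts, fps in sorted(samples)]


def best_power_limit(samples):
    """Return the power limit with the highest fps per watt."""
    return max(efficiency_curve(samples), key=lambda row: row[2])[0]


# Placeholder (power limit in W, fps) pairs, NOT real measurements.
samples = [(150, 20.0), (200, 40.0), (250, 48.0), (300, 54.0), (350, 58.0)]

if __name__ == "__main__":
    for watts, fps, eff in efficiency_curve(samples):
        print(f"{watts:4d} W  {fps:5.1f} fps  {eff:.3f} fps/W")
    print("most efficient limit:", best_power_limit(samples), "W")
```

With real RIFE results in place of the placeholders, the same two functions would show whether the efficiency drop appears with a Tensor Core workload and at which power limit.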